Flippant Remarks about Windows Azure

As of today I’m sticking with Windows Azure. Besides the obvious Mark-Russinovich-in-the-cloud-thing, two features of Azure keep me interested: (i) Table Storage as the scalable data source driving OData feeds for all Songhay System web sites and (ii) Blob Storage of Silverlight (and Flex) sites for all Songhay System rich-media experiences.

Maybe a month ago I was very excited about Azure Web roles. However, when the first bill for having an idle Microsoft cloud web site came in, my excitement faded fast and I sent wild Twitter messages to Steve Marx. I should have done my research but I was more into the coding than into the penny pinching. I was not the only one. Take Paul Mehner:

To give you some idea, my Windows Azure bill has been running over $500 per month for four hosted services and four storage services (plus a few extra instances in staging environments). This is for mostly idle instances (used for demo and training purposes). There are many variables in pricing outside the scope of this short blog post, so your costs could be much different. My purpose in drawing your attention to it here is to give you some financial sense as to why I view the information in this blog post important.

Chris Pietschmann hits closer to home:

If your application is racking up “Compute” time whenever it is “live”, then that equals a total of approximately 720 hours of “compute” time for a total of $86.40 per month.
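Read as plain arithmetic, that figure can be reconstructed as follows. This assumes a 30-day month and a flat rate of 12¢ per instance-hour, which is the rate implied by dividing \$86.40 by 720 hours:

$$
24\ \tfrac{\text{hours}}{\text{day}} \times 30\ \text{days} = 720\ \text{hours},
\qquad
720\ \text{hours} \times \$0.12/\text{hour} = \$86.40
$$

The point is that the meter runs for every hour the instance is deployed, whether or not anyone visits the site.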

In “What I Would Change about Microsoft Windows Azure” the problem I’m having with Azure is excellently summarized:

The Windows Azure deployment model works for big businesses with large numbers of users, or for Web sites which huge spikes in demand. For small businesses though, we would end up paying for a lot hardware that is completely under-utilized. I thought the cloud was supposed to allow me to pay for only the hardware I needed at any moment in time.

I was wondering why, say, Carl Franklin (and Netflix, for that matter) passed on Windows Azure. Many dismiss it with, “The conclusion for Windows Azure is that it’s too expensive…” My flippant response to this reality, in spite of my putting upwards of $200 on a credit card over the last two months, comes in these points:

- I think we all have underestimated Windows Azure Table Storage as a complete replacement for SQL Azure (which costs ~$9/month per instance). Yes, I know this can sound crazy—and actually be crazy.

- I’ve just joined the Extra Small Compute Instance beta program, which is supposed to cut my Web role costs by over 50% (from 12¢/hour to 5¢/hour).

### Flippant Remarks about Windows Azure Table Storage

In “Azure Table Storage, what a pain in the ass,” Oliver Jones writes:

Microsoft have wrapped this in the ADO.NET Data Services API. So it looks fairly full featured. However it is not. At almost every turn I have ended up bashing my head against a Table Storage limitation. Debuging these problems has been a bit of a nightmare.

My chief problem is that Windows Azure Table Storage does not let you store data of “any type you want” (which is what I swear I heard somewhere). Only a limited number of types are supported in Azure Table Storage name-value pairs. The list of supported types in Jim White’s “Windows Azure Table Storage vs. Windows SQL Azure” does not include Nullable types as of this writing, yet I appear to be using Nullable types just fine.

I was fully expecting to persist POCO Entity Framework types as Azure Table Storage objects. Expectations like these are less frequent but they can still sting quite a bit. So what has actually happened is a situation that makes Ruby people scoff: for every POCO type Foo, I’m going through the “ceremony” of defining a corresponding type, TableStorageFoo. For example, here is the “noise” for my TableStorageDocument:

    public class TableStorageDocument : TableServiceEntity, IDocument
    {
        //more noise, noise, noise…
    }

What is implied here is that IDocument is an interface extracted from my POCO Entity Framework type Document. This implication reveals yet another stinging expectation that the latest version of Entity Framework should prevent me from needing an interface this way.

(Hold on. Let me get seriously incoherent for a moment: It is possible to persist POCO entities in Azure Table Storage as serialized strings (XML). However, this .NET serialization process would require a .NET-aware deserialization layer—this adds complexity and makes the whole OData/JSON access story slightly miserable but not impossible.)
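A minimal sketch of that serialized-string idea, assuming the stock `System.Xml.Serialization.XmlSerializer` is the serializer in play. The helper `XmlStringSerializer` and the type `SampleDocument` (with its `Title` and `SegmentId` properties) are hypothetical names made up for illustration; they are not part of any Azure SDK or of the Songhay System code:

```csharp
using System.IO;
using System.Xml.Serialization;

// Hypothetical helper: round-trips a POCO through an XML string so that
// the string could be stored in a single Table Storage property.
public static class XmlStringSerializer
{
    // Serializes any public type with a parameterless constructor to XML text.
    public static string ToXml<T>(T value)
    {
        var serializer = new XmlSerializer(typeof(T));
        using (var writer = new StringWriter())
        {
            serializer.Serialize(writer, value);
            return writer.ToString();
        }
    }

    // Rebuilds the object from XML text produced by ToXml.
    public static T FromXml<T>(string xml)
    {
        var serializer = new XmlSerializer(typeof(T));
        using (var reader = new StringReader(xml))
        {
            return (T)serializer.Deserialize(reader);
        }
    }
}

// Hypothetical POCO, standing in for a real entity type.
public class SampleDocument
{
    public string Title { get; set; }
    public int? SegmentId { get; set; }
}
```

Calling `XmlStringSerializer.ToXml(doc)` yields a string that any client can read, but only a .NET-aware client can hand to `FromXml` to get the typed object back, which is the extra layer the aside above complains about.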

(By the way: I have written a little utility, FrameworkTypeUtility.SetProperties, which uses reflection to set properties of the same name. This means that passing in an instance of IDocument into the constructor of TableStorageDocument does not force me to set properties ‘manually’:

    public TableStorageDocument(IDocument baseDocument)
    {
        FrameworkTypeUtility.SetProperties(baseDocument, this);
        //noise, ceremony, syntactic sugar, etc.
    }

I am sure I’m not the first dude on the planet to “discover” this use of Reflection and there are surely a couple of open source .NET projects based on this use of Reflection.)
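For readers who have not seen this reflection trick, here is a rough sketch of how a same-name property copy might be written. It is not the source of FrameworkTypeUtility.SetProperties, which this post does not show; `PropertyCopier`, `CopyMatchingProperties`, `TableStorageDocumentSketch`, and the `Title` and `SegmentId` members are all hypothetical stand-ins:

```csharp
using System;
using System.Reflection;

// Hypothetical stand-in for a utility like FrameworkTypeUtility.SetProperties.
public static class PropertyCopier
{
    // Copies each readable public instance property on source to a publicly
    // settable property on target that has the same name and a compatible type.
    public static void CopyMatchingProperties(object source, object target)
    {
        if (source == null) throw new ArgumentNullException("source");
        if (target == null) throw new ArgumentNullException("target");

        const BindingFlags flags = BindingFlags.Public | BindingFlags.Instance;
        foreach (PropertyInfo sourceProp in source.GetType().GetProperties(flags))
        {
            // Skip write-only properties and indexers.
            if (!sourceProp.CanRead || sourceProp.GetIndexParameters().Length > 0) continue;

            PropertyInfo targetProp = target.GetType().GetProperty(sourceProp.Name, flags);
            if (targetProp == null || targetProp.GetSetMethod() == null) continue;
            if (!targetProp.PropertyType.IsAssignableFrom(sourceProp.PropertyType)) continue;

            targetProp.SetValue(target, sourceProp.GetValue(source, null), null);
        }
    }
}

// Hypothetical interface and types, for illustration only.
public interface IDocument
{
    string Title { get; set; }
    int? SegmentId { get; set; }
}

public class Document : IDocument
{
    public string Title { get; set; }
    public int? SegmentId { get; set; }
}

public class TableStorageDocumentSketch : IDocument
{
    public string PartitionKey { get; set; } // no counterpart on Document, left untouched
    public string Title { get; set; }
    public int? SegmentId { get; set; }
}
```

With these types, `PropertyCopier.CopyMatchingProperties(new Document { Title = "Hello" }, target)` would set `target.Title` and leave `target.PartitionKey` alone, since the source has no property by that name. Matching on name and assignable type keeps the sketch simple; a real utility would likely need to decide what to do about type conversions and hidden members.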

All of this work I’m doing to get Azure Table Storage to ‘work’ might be a symptom of what Rockford Lhotka writes about in “Some thoughts on Windows Azure”:

But there’s also the lock-in question. If I built my application for Azure, Microsoft has made it very clear that I will not be able to run my app on my servers. If I need to downsize, or scale back, I really can’t. Once you go to Azure, you are there permanently. I suspect this will be a major sticking point for many organizations. I’ve seen quotes by Microsoft people suggesting that we should all factor our applications into “the part we host” and “the part they host”. But even assuming we're all willing to go to that work, and introduce that complexity, this still means that part of my app can never run anywhere but on Microsoft’s servers.

So it’s clear that what Rocky—or someone else just as cool as Rocky—has to do for me is build a conventions/attributes/magic-based persistence layer between my server-independent POCOs and Azure Table Storage. This is far from impossible and it may actually come from Microsoft (because the Entity Framework team might find this useful). I’ll be looking out for it. Until then, I am literally writing two sets of classes for one data access object.

But this situation is not terrible because I use less than ten data access objects for all of my personal projects—and I currently have no clients/jobs demanding custom Azure-based solutions.

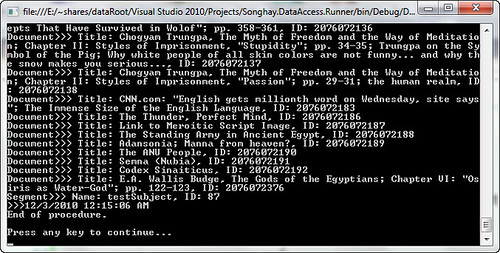

I use so few data objects because my data, Songhay System data, model the document concept. Modeling around the document is quite open, as documents are inherently free flowing. It is my document-centric bias that makes Azure Table Storage so attractive to me. I have the expectation that I can store relatively static documents in the cloud, based on a single OData-based access solution. This single document-storage solution should drive all of my document-based projects. This is one of many reasons why my little company is called Songhay *System*: the word system is singular.

Single point failure for a single cloud owned by a single company is not impossible but hardly individual—more conspiratorial…
